
I am currently a Senior Researcher at Vivix AI. I obtained my Ph.D. in 2023 from the MMLab at The Chinese University of Hong Kong (CUHK), where I was fortunate to be advised by Prof. Hongsheng Li and Prof. Xiaogang Wang. Before that, I earned my bachelor's degree from Nanjing University in 2019.

Email / Google Scholar / GitHub / CV


My current research focuses on multimodal models, diffusion models, and computational photography. I am passionate about research with real-world impact that addresses pressing technological challenges. Most of my publications have been integrated into commercial products.


Yunpeng Liu, Boxiao Liu, Yi Zhang, Xingzhong Hou, Guanglu Song, Yu Liu, Haihang You CVPR, 2025 arXiv / supplement We introduce the Curriculum Consistency Model (CCM), which addresses the inconsistent learning complexity in Consistency Distillation for diffusion and flow models by balancing that complexity with a PSNR-based curriculum.

|

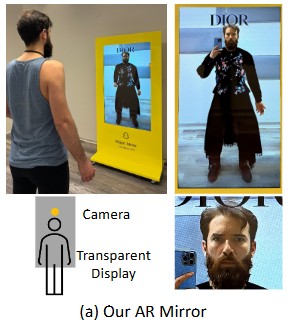

Jian Wang, Sizhuo Ma, Karl Bayer, Yi Zhang, Peihao Wang, Bing Zhou, Shree Nayar, Gurunandan Krishnan SIGGRAPH Asia, 2024 (Journal, Best Paper Award) arXiv / supplement / code A novel AR mirror system that provides a seamless, perspective-aligned user experience by placing the camera behind a transparent display.


Xiaoyu Shi, Zhaoyang Huang, Fu-Yun Wang, Weikang Bian, Dasong Li, Yi Zhang, Manyuan Zhang, Ka Chun Cheung, Simon See, Hongwei Qin, Jifeng Dai, Hongsheng Li SIGGRAPH, 2024 arXiv / code We introduce Motion-I2V, a novel framework for consistent and controllable image-to-video generation (I2V). Unlike previous methods that directly learn the complicated image-to-video mapping, Motion-I2V factorizes I2V into two stages with explicit motion modeling.

|

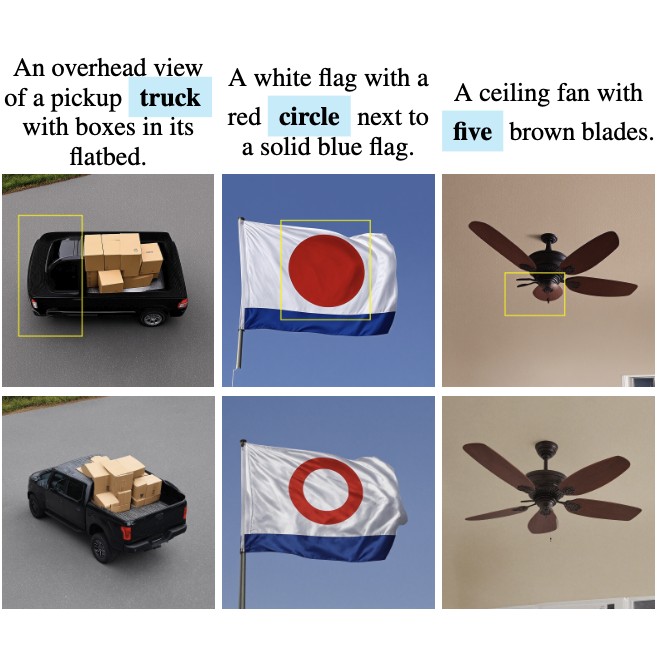

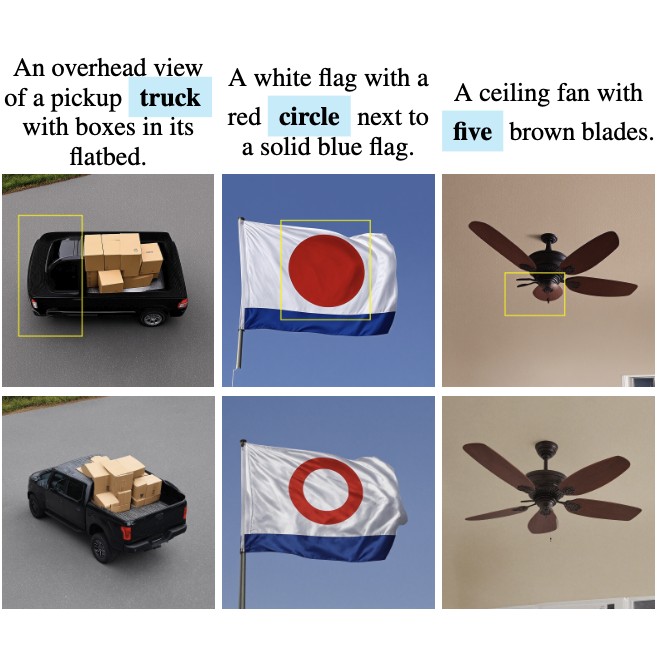

Yi Zhang, Xiaoyu Shi, Dasong Li, Xiaogang Wang, Jian Wang, Hongsheng Li NeurIPS, 2023 arXiv / code / project A unified conditional framework based on diffusion models for image restoration.


Yi Zhang, Dasong Li, Xiaoyu Shi, Dailan He, Kangning Song, Xiaogang Wang, Hongwei Qin, Hongsheng Li arXiv, 2023 arXiv / code A general-purpose backbone for image restoration tasks (e.g., denoising, deraining, and deblurring). We propose a novel kernel basis attention (KBA) module that effectively aggregates spatial information via a set of learnable kernel bases.


Dasong Li, Xiaoyu Shi, Yi Zhang, Xiaogang Wang, Hongwei Qin, Hongsheng Li CVPR, 2023 arXiv / code / project We propose a grouped spatial-temporal shift module for effective video restoration. It surpasses previous SOTA methods on video deblurring and denoising with only 43% of their computational cost.


Dasong Li*, Yi Zhang*, Ka Lung Law, Xiaogang Wang, Hongwei Qin, Hongsheng Li IJCV, 2022 arXiv A practical low-light imaging system for burst raw image denoising. It has been deployed in some commercial smartphones.


Dasong Li, Yi Zhang, Ka Chun Cheung, Xiaogang Wang, Hongwei Qin, Hongsheng Li ECCV, 2022 arXiv / code We learn degradation representations for blurry images. The learned degradation representation yields clear improvements on both image deblurring and reblurring.


Yi Zhang, Dasong Li, Ka Lung Law, Xiaogang Wang, Hongwei Qin, Hongsheng Li CVPR, 2022 arXiv / code / SenseNoise dataset A self-supervised image denoising method that uses noisy images and the noise model. We achieve SOTA results on both real-world noise and synthetic noise (both point-wise and spatially correlated noise types).

|

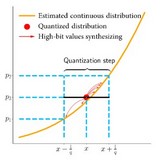

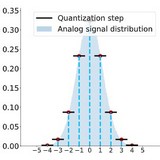

Yi Zhang, Hongwei Qin, Xiaogang Wang, Hongsheng Li ICCV, 2021 arXiv / code A method to synthesize "almost the most realistic" raw image noise by sampling directly from the real noise distribution. We also found that existing comparisons of noise synthesis methods rely on inaccurate noise parameters.


Zhen-Jia Pang, Ruo-Ze Liu, Zhou-Yu Meng, Yi Zhang, Yang Yu, Tong Lu AAAI, 2019 (Oral) arXiv / demo Playing as Protoss, we achieve a win rate of over 93% against the most difficult non-cheating built-in AI (level 7) playing Terran, after training for two days on a single machine with only 48 CPU cores and 8 K40 GPUs.


The website template was borrowed from here.
